Essay: Assessing machine sentience

Published on 23 June 2022 by Andrew Owen (14 minutes)

I’ve long been interested in the field known as artificial intelligence (AI). Today, I prefer the term machine learning (because we understand so little about what intelligence really is that we don’t know how to simulate it). Following the claims of a Google engineer that the company’s LaMDA project has achieved sentience, I thought it was worth dusting down an essay I wrote on the subject when I was an undergraduate (before neural networks and big data went mainstream).

“It’s a lot easier to see, at least in some cases, what the long-term limits of the possible will be, because they depend on natural law. But it’s much harder to see just what path we will follow in heading toward those limits.”—K. Eric Drexler

In his 1986 book Minds, Brains and Science, John Searle says: “As computers become more and more complicated, it becomes harder and harder to understand what goes on inside them. With gigabytes of RAM and access to databases comprising almost the whole of human knowledge, it isn’t inconceivable that a research project could go berserk… a virus could blossom beyond its creator’s wildest imagination… a program designed to unify information might begin to learn from the information that it has compiled.”

This view of machine intelligence has gained wide appeal among an audience that is unaware of the realities of technology, fed on cyberpunk science fiction, and paranoid about computers taking over the world. In the book, Searle is primarily concerned with disproving this notion of thinking digital computers (as distinct from other types of computers, possibly yet to be invented). Part of the problem of deciding whether computers are capable of thought lies in our lack of understanding of what thought actually is. Thus, his first theme is “how little we know of the functioning of the brain, and how much the pretension of certain theories depends on this ignorance.”

In a roundabout way, Searle does in fact include other types of computers: he has asserted that even a perfect copy of the brain that looks and works the same way is only a simulation. Searle admits that the minds of the title can’t readily be defined, that it is still not completely clear how brains work, and that any discipline with the word science in its name probably isn’t one. A notable exception, one that to all intents and purposes is a science, is computer science.

Searle begins by defining the mind-body problem. Physics Nobel laureate Roger Penrose says: “In discussions of the mind-body problem, there are two separate issues on which attention is commonly focused: ‘How is it that a material object (a brain) can actually evoke consciousness?’; and conversely; ‘How is it that a consciousness, by the action of its will, can actually influence the (apparently physically determined) motion of material objects?’” Searle adds the question, “How should we interpret recent work in computer science and artificial intelligence – work aimed at making intelligent machines? Specifically, does the digital computer give us the right picture of the human mind?”

Searle rejects the dualist view that the physical world, governed by the laws of nature, is separate from the mental world, governed by free will. He identifies four features of mental phenomena: consciousness, intentionality, subjectivity, and mental causation. He says: “All mental phenomena… are caused by processes going on in the brain… Pains and other mental phenomena are just features of the brain”. By this he means that all experience takes place in the mind, as distinct from the external, physical stimulus. He gives the example of a patient undergoing an operation under anaesthetic: the patient feels no pain from the surgeon’s knife. The physical action is there, but the mental consequence is suppressed.

Searle deals with the four requirements for an analysis of the mind in turn. He explains that consciousness involves “certain specific electrochemical activities going on among neurons or neuron-modules”. He explains that intentionality (drives and desires) can to a degree be proven to exist, for example in the case of thirst. He shows that subjective mental states exist “because I am now in one and so are you”. And he shows that in mental causation, thoughts give rise to actions.

This brings us back to Penrose’s second question. If the mind is software to the body’s hardware, then it’s not so difficult to see how mental causation works. Neither the mind nor computer software has a tangible presence. The mind is held in the matrix of the brain as software is held in the ‘memory’ of a computer. Yet a thought can result in an action, such as raising an arm, because physical signals are sent, just as software sends physical signals through a computer to raise the arm of a robot. An extension of this thinking gives rise to the notion that computer intelligence is possible.

The running theme of the book is Searle’s attempt to counter the claim, made by proponents of strong AI, that computers can be taught to think. The claim is easily refuted if you accept that humans are not taught to think, but advocates of machine intelligence say they are teaching computers to learn. They see AI as the next step in the evolution of the computer towards the ultimate goal of consciousness. Searle says that the digital computer as we know it will never be able to think, no matter how fast it gets or how much ‘memory’ it has.

In the summer of 1956, a group of academics met at Dartmouth College to explore the conjecture that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” The conjecture was formulated by John McCarthy, and the field of enquiry it engendered came to be known as artificial intelligence. The problem as Searle sees it is that the features of intelligence can’t be precisely defined, so computer experts in the AI field end up ignoring at least one of the four mental states to get their programs to work. These programs then gain credibility when psychology uses them to describe the behavior of the human mind. Searle points out that when the telegraph was the latest technology, it was thought that the brain worked like one.

“There are two ways of thinking about how a machine might approximate human intelligence. In the first model, the goal is to achieve the same result as a human would, although the process followed by a machine and man needn’t be similar. (This model of shared result, but distinct process describes how computers do arithmetic computation.) In the second model, the goal is not only for the computer to achieve the same result as a person, but to do it following the same procedure.”
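The arithmetic case in the quote can be made concrete. The minimal Python sketch below multiplies two numbers by binary shift-and-add, a classic method used in simple hardware multipliers. It does not use the decimal long multiplication a person would do on paper. The function name `shift_add_multiply` is hypothetical, and the sketch handles only non-negative integers.

```python
def shift_add_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers using only shifts and additions."""
    if a < 0 or b < 0:
        raise ValueError("this sketch only handles non-negative integers")
    result = 0
    while b:
        # If the lowest bit of b is set, add the current shifted copy of a.
        if b & 1:
            result += a
        a <<= 1  # double a (shift left by one bit)
        b >>= 1  # halve b (shift right by one bit), dropping the bit just used
    return result


# Same result a person reaches by long multiplication, via a different process.
print(shift_add_multiply(123, 456))  # 56088
```

Both routes arrive at 56088. Only the process differs, which is the first of the two models the quote describes.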

Advocates of strong AI believe that given the same information as a human, a computer, following a set process, could derive the same meaning. However, Searle argues there is no distinct process in the human mind for a computer to simulate, and therefore a computer can’t have meaning, one of the five components of human language. “In the case of human beings, whenever we follow a rule we’re being guided by the actual content or the meaning of the rule.”

“Language isn’t so much a thing as it is a relationship. It makes no sense to talk about words or sentences unless the words and sentences mean something. For sentences to mean something, their components must be linked together in an orderly way. A linguistic expression must be encoded in some medium—such as speech or writing—for us to know it is there. And there must be people involved in all this to produce and receive linguistic messages.

Thus, there are five interrelated components that go to make up human language: meaning (or semantics), linkage (or syntax), medium, expression, and participants.” Searle argues that there can be no meaning in a computer’s understanding of language, because meaning relies on some form of judgement or opinion. It could further be argued that what the computer receives isn’t in fact a linguistic message but an instruction on how to react.

To some degree you can play around with syntax: ‘Grammar rules break you can; understood still be will you.’ The ‘meaning’ is conveyed, and perhaps a computer that looks at individual words could still derive it. However, if we change the sentence to ‘Can you break grammar rules; will you still be understood?’, the ‘meaning’ changes. The words are the same, but the order, and the meaning, are different. The first is a statement (some languages, such as Tuvan, have this grammatical form), while the second is clearly a question. How can a computer derive the ‘meaning’ if we don’t include the question mark?
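The point about individual words can be checked with a small Python sketch. It assumes the simplest possible word-level view: lower-case the text, keep only alphabetic words, and count them (a so-called bag of words). By that measure the two example sentences are indistinguishable, and only their word order tells them apart. The helper name `words` is hypothetical.

```python
import re
from collections import Counter


def words(sentence: str) -> list[str]:
    """Lower-case the sentence and return its alphabetic words, in order."""
    return re.findall(r"[a-z]+", sentence.lower())


statement = "Grammar rules break you can; understood still be will you."
question = "Can you break grammar rules; will you still be understood?"

# Counting which words occur (a bag of words): the two sentences look identical.
print(Counter(words(statement)) == Counter(words(question)))  # True

# Comparing the words in order: they differ.
print(words(statement) == words(question))  # False
```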

Searle had previously put this idea forward in his Chinese room argument. Chinese characters are passed into a room, where a non-Chinese speaker follows a set of rules to produce a response that’s passed out. Searle argues there is no mind in the room. Advocates of strong AI claim the mind works like the room. This doesn’t seem to be the case if we stick to Searle’s four mental states. If it were, then Charles Babbage’s Analytical Engine (a mechanical computer) would, if constructed, have been capable of thought. The argument for the digital computer is that the underlying technology doesn’t matter: as with a car, you can change the engine, but it still works the same way.
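The room can be sketched as nothing more than a lookup table. In this minimal Python illustration, the rule-book entries and the name `chinese_room` are hypothetical, not Searle’s. The function returns fluent-looking Chinese replies, yet nothing in it represents what any character means, which is the gap Searle points to.

```python
# A hypothetical rule book: each rule pairs an input string with an output string.
# The operator only matches shapes; nothing here encodes what the characters mean.
RULE_BOOK = {
    "你好吗？": "我很好。",            # "How are you?" -> "I'm fine."
    "你叫什么名字？": "我没有名字。",  # "What is your name?" -> "I have no name."
}


def chinese_room(symbols: str) -> str:
    """Return the reply the rule book pairs with the input, or a fixed fallback."""
    # The fallback is also just a string of shapes to the operator.
    return RULE_BOOK.get(symbols, "对不起。")  # "Sorry."


print(chinese_room("你好吗？"))  # 我很好。
```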

This idea is explored in William Gibson and Bruce Sterling’s novel “The Difference Engine”, where the computer age arrives a century ahead of time. In this version of history, Babbage perfects his Analytical Engine, and the steam-driven technology of the industrial revolution includes “calculating-cannons, steam dreadnoughts, machine guns and information technology”.

As Searle points out, computers follow rules. We don’t have any rules that we follow. If we did, then every human being would look and function the same, and one brain would be a carbon copy of another. As all brains are unique (even in the case of identical twins), it is clear that there is no hard-and-fast rule for building them. They grow. How can you grow a digital computer?

John McCarthy argued that even a machine as simple as a thermostat can be said to have beliefs. If you look at the human body as a machine and the mind as part of that machine, then it seems clear that machines can’t have feelings, as they have no ‘sense receptors’. They may have input devices for audio, video, heat spectrum or whatever, but the data is converted into something usable at the point it is taken in. In humans, input goes directly to the brain and is interpreted there. As Searle points out, if someone punches you in the eye, you ‘see stars’: the information is processed visually. No machine is rigged like us, nor does Searle believe one ever will be. And even if one were, he believes it would still be a simulation, not a true consciousness. After all, someone has to tell the computer that bumping into a wall hurts, because the impact itself doesn’t hurt it at all. However, you could conceivably build a mobile computer that would feel a ‘pain’ response if it suffered damage. We already have self-analyzing and self-repairing computers. This could logically be extended, but self-awareness is only one aspect of consciousness.
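The point that someone has to tell the computer what hurts can be sketched in a few lines of Python. Everything here is hypothetical: the bump-sensor reading, the threshold value, and the function name `pain_response`. The machine labels an impact as ‘pain’ only because the programmer wrote a rule that says so.

```python
# Hypothetical threshold chosen by the programmer, in arbitrary sensor units.
PAIN_THRESHOLD = 5.0


def pain_response(impact: float) -> str:
    """Map a hypothetical bump-sensor reading to a programmed 'pain' reaction."""
    # The 'pain' is nothing more than this comparison against a fixed number.
    if impact > PAIN_THRESHOLD:
        return "pain: reverse and log damage"
    return "no pain: carry on"


print(pain_response(7.5))  # pain: reverse and log damage
print(pain_response(1.0))  # no pain: carry on
```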

Self-described postmodernist feminist author Kathy Acker says: “When reality—the meanings associated with reality—is up for grabs, which is certainly Wittgenstein’s main theme and one of the central problems in philosophy and art ever since the end of the nineteenth century, then the body itself becomes the only thing you can return to. You can talk about any intellectual concept, and it is up for grabs, because anything can mean anything, any thought can lead into another thought and thus be completely perverted. But when you get to the actual physical act of sexuality, or of bodily disease, there’s an undeniable materiality which isn’t up for grabs. So it’s the body which finally can’t be touched by all our skepticism and ambiguous systems of belief. The body is the only place where any basis for real values exists anymore.”

Perhaps this is the summation of the mind-as-a-computer/body problem. In Japan, a great deal of work has been done to achieve a fifth generation of computers with natural-language and heuristic learning capabilities. However, this work has largely been unsuccessful. “Japan has no advantage in software, and nothing short of a total change of national character on their part is going to change that significantly. One really remarkable thing about Japan is the achievement of its craftsmen, who are really artists, trying to produce perfect goods without concern for time or expense. This effect shows, too, in many large-scale Japanese computer programming projects, like their work on fifth-generation knowledge processing. The team becomes so involved in the grandeur of their concept that they never finish the program.”

This isn’t just Japan’s problem but that of computer scientists in general, who are approaching the problem from the wrong direction. You can’t craft a brain. Children learn to walk and talk without being taught, so any computer that computer scientists hope to endow with intelligence must have a natural learning program. Unfortunately, it is still not clear how the concepts of speech are learned, and so the future of machine intelligence looks unpromising.

People often ascribe an ‘intelligence’ to (mostly) inanimate objects. The car is a classic example, but ships have been called ‘she’ for years. It is easy to see why: if, while writing this essay, for instance, one suffers a ‘crash’ that loses two pages and many hours of work, one is likely to consider the machine ‘evil’ or ‘out to get one’. This is clearly not the case. However, because people generally don’t understand what happens inside the ‘box of tricks’, as with the Chinese room, they can ascribe an intelligence to it when there isn’t one there. Paranoia about machines taking over the world has also come about because mankind has become so dependent on technology.

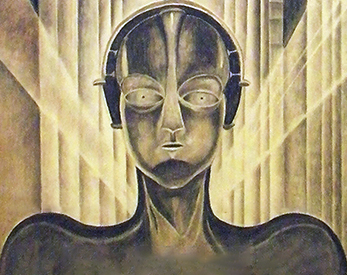

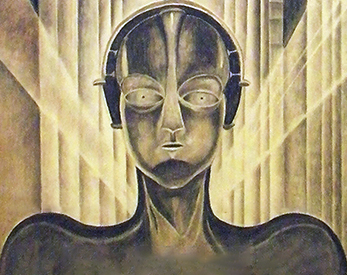

Cyberpunk fiction doesn’t help the situation. The classic text is Gibson’s “Neuromancer”, in which a network of computers achieves a single intelligence. This idea is purely fictional, but the other public image is more interesting. It derives from the film “Blade Runner”, which is of course based on Philip K. Dick’s novel “Do Androids Dream of Electric Sheep?”. One of the book’s themes not wholly represented in the film is the importance of owning an animal. Because it is a post-apocalyptic society, most of the animals are dead. While there is a premium on owning a real animal, most people make do with artificial ones. These happily simulate the actions of sheep, cats, frogs or whatever, but that’s all. They don’t think.

The film poses an interesting question. At the end, as the replicant Roy Batty is dying, he says: “I’ve seen things you people wouldn’t believe. Attack ships on fire off the shoulder of Orion. I watched C-beams glitter in the dark near the Tannhäuser Gate. All those moments will be lost in time, like tears in rain.” This raises a philosophical question: “If an android with a computer brain can have ‘experiences’ that he can relate to others, and yet is mortal, then does he not have inalienable human rights?”

One area where computer simulations of intelligence consistently fail is in producing new creative data, humor for instance. It is probably impossible to program a computer to make random connections. This is, in part, because there is no such thing as randomness. By analogy with the causality built into Einstein’s theory of relativity, every thought in the human brain can be seen as having derived in some way from a previous thought or external stimulus. Let us take an example where a computer would have difficulty: the sick joke. Computers don’t know what is in poor taste because they don’t have ‘taste’. A computer may be told that a joke about ethnic minorities is in poor taste, but it would not be able to come up with a new joke of its own in similar poor taste. Socially this is a ‘good’ thing, but in terms of simulating the human mind it is very ‘bad’.
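Part of this point can be illustrated in code. The ‘random’ numbers a program produces usually come from a pseudo-random number generator. This is a deterministic algorithm that yields exactly the same sequence whenever it starts from the same seed. The minimal Python sketch below uses the standard library’s `random.Random`. The helper name `draws` and the seed value 42 are arbitrary choices for illustration. Programs can also draw on operating-system or hardware entropy sources, so this shows the usual case rather than a hard limit.

```python
import random


def draws(seed: int, n: int = 5) -> list[int]:
    """Return n 'random' integers from a generator started with the given seed."""
    rng = random.Random(seed)  # an independent, explicitly seeded generator
    return [rng.randint(1, 100) for _ in range(n)]


# Two generators started from the same seed produce identical sequences.
print(draws(42) == draws(42))  # True
```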

Finally, we return to Wittgenstein, who sums up Searle’s overall argument when he says: “Meaning is not a process which accompanies a word. For no process could have the consequences of meaning.”