Art and Artificial Intelligence

Published on 2 November 2023 by Andrew Owen (10 minutes)

It is a truth universally acknowledged, that a single technology in possession of sustained media attention, must be in want of government legislation. And so the governments of 28 nations including the US, India and China came together to sign the Bletchley Declaration on artificial intelligence (AI). It’s named after Bletchley Park, where Alan Turing worked as a code breaker (and my maternal grandmother worked as an army motorcycle dispatch rider) during the Second World War. Turing famously went on to invent the Turing test as a measure of AI; could a machine fool a human into thinking it too was human. Arguably, that test was passed in 1989 when a student at University College Dublin spent an hour and 20 minutes arguing with an abusive chatbot called MGonz.

Science fiction writer Arthur C. Clarke’s third law states: “Any sufficiently advanced technology is indistinguishable from magic”. We are living in an era where the public understanding of the technology we have built our society on is incredibly limited. To many, AI is not only indistinguishable from magic, it is indistinguishable from human intelligence and creativity. We really need to do a better job of educating people. But Collins Dictionary has named AI as its word of the year, so perhaps we’ll get there sooner rather than later. The stated aim of the Bletchley Declaration is to boost global efforts to collaborate on AI safety. The idea of intelligent computers going rogue has been around since the 1960s, but was popularized by the 1984 film “The Terminator”. But are the fears justified?

Twenty years ago, philosopher Andreas Matthias asked the question: “Who is responsible if AI does harm?” We still don’t have an answer. Consider Philippa Foot’s 1967 moral philosophy thought experiment, commonly known as “the trolley problem”:

There’s a runaway trolley (tram) headed straight for five people tied up and unable to move. You are stood next to a lever. If you pull it, the trolley will switch to a side track but there is a person on it. You have only two options:

1. Do nothing, and five people die.
2. Pull the lever, and one person dies.

Whichever choice you make, you are responsible for your decision. The autonomous vehicle equivalent of the trolley problem is that the brakes have failed and proceeding will kill a family of five in the car ahead, but going off-road will only kill the sole occupant of the vehicle. The AI lacks a conscience. It can only follow its programming. But if that programming was arrived at through machine learning, rather than a direct instruction, then it is unclear where legal responsibility lies for the AI’s decision. This will require legislation, and it will likely take several attempts to get it right.

Currently, the AI that you are most likely to encounter is of the large language model (LLM) variety. The current generation easily pass the Turing test, but they are not sentient. Until you provide input, no processing is taking place. Impressive as they are, LLMs are simply an advanced natural language processor, coupled to an advanced predictive text generator. The big breakthrough in natural language processing is that the models stopped trying to parse a word at a time, and instead work with blocks of text. This is what makes machine translation so much better than it used to be. But these tools are bounded by their datasets. Translation from French to English is easy because there’s a large data set of texts published in both languages. But if you are translating from an uncommon language into another uncommon language, English will be used as an intermediate step and the accuracy will go down. No increase in processing power will address this. It can only be fixed by increasing the size of the data set. This will happen over time as humans provide feedback on bad translations.

The next step in natural language processing was in assigning weight to words and mapping them in relation to other words. These mappings occur in hundreds of dimensions, and together they make up the LLM. This enables the AI to interpret your commands, and generate output. But the output typically happens a word at a time. Based on your query, it generates the first word. And then it determines which word is likely to follow. It will iterate until your query is resolved. But there is a limit to how many words it keeps in its buffer. This might be 2048 words. When it gets to generating the 5,000th word, it will have forgotten more than half of what came before. There is no intent, and no morality. Depending on the data set it will give you plausible-sounding untruths, or in the worst case, racist screeds. In fact, a great number of humans are currently employed in trimming data sets to filter out inherent biases. To paraphrase Tom Lehrer: data sets are like a sewer; what you get out depends on what you put in.
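To make the word-at-a-time loop and the limited buffer concrete, here is a minimal Python sketch. It is a toy, not how any real LLM is built: the names `train_bigrams`, `generate` and `forgotten_words` are made up for illustration, the "model" only counts which word follows which in a tiny sample text, and it looks at just the last word in its buffer, whereas a real model weighs everything in the buffer and counts tokens rather than whole words.

```python
from collections import Counter, defaultdict, deque


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_words, window_size):
    """Generate one word at a time, remembering only the last window_size words."""
    output = prompt.split()
    # The deque silently drops the oldest word once it is full (the "buffer").
    window = deque(output, maxlen=window_size)
    for _ in range(max_words):
        # Toy simplification: only the most recent word in the buffer is consulted.
        candidates = follows.get(window[-1])
        if not candidates:
            break  # nothing in the data set ever followed this word
        next_word = candidates.most_common(1)[0][0]  # pick the likeliest follower
        output.append(next_word)
        window.append(next_word)
    return output, list(window)


def forgotten_words(position, window_size):
    """How many earlier words have fallen out of the buffer at a given position."""
    return max(0, position - window_size)


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat and the cat sat down")
    words, window = generate(model, "the", max_words=10, window_size=3)
    print(" ".join(words))
    print("still remembered:", window)
    print("forgotten by word 5,000:", forgotten_words(5000, 2048))
```

The last line is just the arithmetic from the paragraph above: with a 2048-word buffer, 2,952 of the first 5,000 words are gone, which is more than half. The sketch also shows the sewer principle in miniature, since the generator can only ever emit words that appeared in its sample text.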

As an aside, the best use case I’ve found for LLMs right now is in generating plausible-sounding wrong answers for multiple-choice questions when given the correct answer as input. Aside from the generated content being wrong and potentially offensive, another consideration is that works created in this way are probably not protected by copyright law. You may recall the case of David Slater, who unsuccessfully tried to claim copyright over selfies taken by a macaque using his equipment. In December 2014 the US Copyright Office stated that works created by a non-human are not protected by copyright.

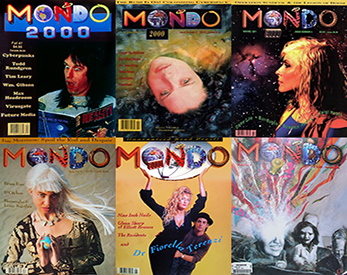

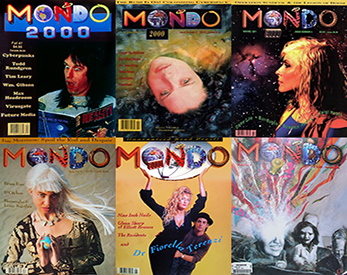

And now I have to address the elephant in the room: appropriation. I have read a lot of opinion on this topic by computer scientists who lack a fundamental understanding of art, and artists who lack a fundamental understanding of computer science. A polarized debate is no debate at all. It’s just two sides shouting at each other and calling each other names. It’s a shame Arnold Hauser isn’t around to write a fifth volume of “The Social History of Art”, which might be subtitled: “Postmodernism, The Digital Age”. I think there’s an argument that Bart Nagel invented “AI art” at Mondo 2000 in the 1990s. Let me explain.

The artists complain that AI art is just a “collage” of existing works, in particular their works, which they own rights to, that have been used without their consent. It’s not quite that straightforward. The art models are similar to the LLMs in that they model tagged images to create a dataset. But they don’t store the actual images. The computer scientists say that human artists have the whole of art history to draw on and that the AI is no different. They are also wrong. Art is created with intent, and AI has no intent. The computer scientists argue that the intent comes from the instructions given to the AI by the human. But the AI has no creativity. It just uses natural language processing to examine the query and then a deterministic approach to generate something based on its dataset. It lacks originality. The same criticism holds true for generative text. Remember the sewer: banality in, banality out.

Hauser wrote:

Post-impressionist art is the first to renounce all illusion of reality on principle and to express its outlook on life by the deliberate deformation of natural objects. Cubism, constructivism, futurism, expressionism, dadaism and surrealism turn away with equal determination from nature bound and reality-affirming impressionism.

You could plug the complete history of human art before Picasso into your dataset. But no matter what query you submitted, AI art would not be able to produce “Guernica”. But back to Nagel.

About a decade ago, with help from eBay and an obscure used book store in San Francisco (with a business card drawn by Robert Crumb), I collected the complete set of “Mondo 2000” magazine. The successor to “High Frontiers” and “Reality Hackers”, it was an independently financed magazine published in San Francisco from 1989 to 1998. There were 17 issues in all and a book, A User’s Guide To The New Edge, which Albert Finney can be seen reading in Dennis Potter’s Karaoke. It was published sporadically during much of its life and whenever I happened to be in Forbidden Planet in Cardiff, and they happened to have a new issue in stock, I bought it. You may have heard of it in its new incarnation as a blog. You’ve also probably heard of WiReD magazine (started in San Francisco in 1993 and featuring a lot of the same writers). The main difference between them was that, to the best of my knowledge, Mondo never carried a three-page fold-out advert for a Lexus.

As an aside, Mondo’s editor fairly accurately predicted the world we’re now living in:

“The techno-elite are perhaps the only group advantaged by the new economy. They will be the new lords of the terrain in a Dickensian world of beggars and servants. Just because they think of themselves as hipsters doesn’t mean we should expect them to share the wealth.”—R. U. Sirius

Mondo’s art director, photographer Bart Nagel, made heavy use of Photoshop collage to create the look of the magazine. Nagel had to do the legwork of finding the source material and digitally composing it. But like today’s AI art, he was bound by the dataset. Mark Penner-Howell defines appropriation thus:

Appropriation in art is the use of pre-existing objects or images with little transformation applied to them. In the visual arts, to appropriate means to properly adopt, borrow, recycle or sample aspects (or the entire form) of human-made visual culture. Inherent in our understanding of appropriation is the idea that the new work re-contextualizes whatever it borrows. In most cases the original ‘thing’ remains accessible as the original, without significant change.

He notes that “collage” was first used to describe works by Braque and Picasso which appropriated found materials including newspapers, magazines, sheet music, wall paper samples and so on. Nagel’s innovation was to replace glue with digital compositing. Although AI art isn’t literally sticking images together, I still think it’s not entirely unfair to consider it a form of collage. But wait, does that mean I consider it art? I think we have to. Instructing an AI to composite an image from its dataset is as valid an expression of art as what Nagel was doing. Although proving copyright may still be a challenge. And if the generated image is substantially unchanged from a reference work, then existing copyright laws provide protection to the original artist. But is it good art? Well, that’s a matter of taste.

But I would argue that the area where AI is having the biggest impact is in healthcare. The ability to apply machine learning (ML) to vast datasets has huge implications for drug discovery, diagnosis and crisis management, to name a few. This week, it became public knowledge that the Apple Watch was originally developed by a secretive Apple spin-off called Avolonte Health with the aim of including a non-invasive glucose monitor. That’s still a dream rather than a reality, but Apple VP of processor architecture Tim Millet has now been assigned to oversee the project. So it shouldn’t be a surprise that the S9 chip in the latest Apple Watch has four ML cores.

We’re in the middle of a revolution that could have as profound an impact on people’s lives as the internet. But if we look at how the internet developed, often it was more by luck than judgement. Like Barbie or nuclear fission, AI is neutral. It can be used for the greater good or to cause great harm. If we’re going to make the right choices, we need to make sure our policy makers are informed. Thus far they haven’t done a great job of listening to the climate scientists, but hopefully the next generation will do a better job.

Today’s blog also coincides with the release of the final track by The Beatles. It’s based on an original mono cassette recording made by John Lennon in the 1970s. It includes guitar parts recorded by George Harrison in the 1990s and new contributions from Paul McCartney and Ringo Starr. The surviving members of the group originally attempted to do something with it during the Beatles Anthology project, but it was considered impossible because of the audio level of the piano in relation to Lennon’s voice. But machine audio learning (MAL) software developed by Peter Jackson’s team for the 2021 “Get Back” documentary enables demixing to completely isolate individual voices and instruments.

Image: Mondo 2000 covers (issues 1 to 6). Appropriated from Bart Nagel.